Google Chrome has a little Easter egg: when you have no network connection, opening any URL brings up a game called Dino Run. Press the space bar to jump. You can also open chrome://dino directly to play this mini-game. Recently, Ravi Munde, a graduate student at Northeastern University (USA), used reinforcement learning to control Dino Run.

The following content is from Ravi Munde's blog, compiled by AI Headlines:

This article starts from the basics of reinforcement learning and then walks through the following steps:

Building a bidirectional interface between the browser (JavaScript) and the model (Python)

Capturing and preprocessing images

Training the model

Evaluation

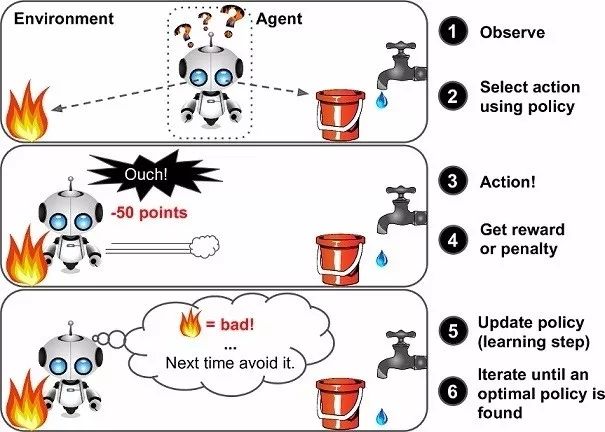

Reinforcement learning

For many people reinforcement learning may be a new term, but children learn in much the same way, and it is still how our brains work. A reward system is the basis of any RL algorithm. Think of a toddler taking first steps: a positive reward might be applause or candy from the parents, while a negative reward means no candy. Children learn to stand up before they start walking. In artificial-intelligence terms, the main objective of the agent (Dino, in our case) is to maximize a numerical reward by performing a particular sequence of actions in the environment. The biggest challenge in RL is the absence of supervision (labeled data) to guide the agent: it must explore and learn on its own. The agent starts with random actions, observes the reward each action brings, and learns to predict the best action when it faces similar conditions in the environment.

Caption: Vanilla Reinforcement Learning Framework

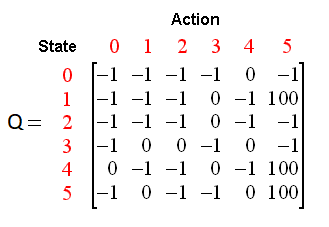

Q-learning

We use Q-learning, a kind of RL, to approximate a special function (the Q-function) that drives the action-selection policy for any sequence of environment states. Q-learning is a model-free form of RL that maintains a table of Q-values for every state and action taken, updated from the rewards received. A sample Q-table gives an idea of how the data is structured. In our case, the states are screenshots of the game, and the actions are do nothing (0) and jump (1).

We solve this problem through regression, using a deep neural network to predict the Q-values, and choose the action with the highest predicted Q-value.
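A minimal tabular sketch of the Q-learning update, in plain Python, shows what "updating the Q-table" means. It is an illustration, not the author's code: the state names, the learning rate `ALPHA` and the discount `GAMMA` are assumptions. The deep network described below replaces the table, but it is trained toward the same target, `reward + GAMMA * max Q(next_state)`.

```python
import random
from collections import defaultdict

ACTIONS = [0, 1]  # 0 => do nothing, 1 => jump (as in the article)
ALPHA = 0.5       # learning rate (illustrative assumption)
GAMMA = 0.9       # discount factor (illustrative assumption)

# Q-table: maps a state to one Q-value per action, all starting at 0.0
q_table = defaultdict(lambda: [0.0 for _ in ACTIONS])


def q_update(state, action, reward, next_state, terminal):
    """One Q-learning step: move Q(state, action) toward the TD target."""
    if terminal:
        target = reward  # no future reward after a crash
    else:
        target = reward + GAMMA * max(q_table[next_state])
    q_table[state][action] += ALPHA * (target - q_table[state][action])
    return q_table[state][action]


def greedy_action(state):
    """Pick the action with the highest Q-value (ties broken at random)."""
    values = q_table[state]
    best = max(values)
    return random.choice([a for a in ACTIONS if values[a] == best])
```

For instance, `q_update("cactus_near", 0, -1.0, None, True)` sets the value of doing nothing next to a cactus to -0.5; once `q_update("cactus_near", 1, 0.1, "cactus_passed", False)` has raised the value of jumping to 0.05, `greedy_action("cactus_near")` returns 1 (jump).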

Caption: Sample Q-table

▌ Settings

First, set up the environment:

1. Select a virtual machine

We need a complete desktop environment where we can capture and use screenshots to train the model. I chose the Paperspace ML-in-a-box (MLIAB) Ubuntu image. The advantage of MLIAB is that it comes preloaded with Anaconda and many other ML libraries.

2. Install Keras and set it up to use the GPU

Paperspace's virtual machine comes with these preinstalled. If yours does not, install them as follows:

    pip install keras
    pip install tensorflow

In addition, to make sure the GPU is recognized, run the following Python code; you should see the available GPU devices listed:

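    # works with standalone Keras 2.x on a TensorFlow 1.x backend;
    # on TensorFlow 2.x, tf.config.list_physical_devices('GPU') gives the same information
    from keras import backend as K
    K.tensorflow_backend._get_available_gpus()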

3. Install dependencies

Selenium:

    pip install selenium

OpenCV:

    pip install opencv-python

Download ChromeDriver:

http://chromedriver.chromium.org

▌ Game framework

Open chrome://dino and press the space bar to play the game. If you want to modify the game code, you can instead extract the game from Chromium's open-source code base.

Since the game is written in JavaScript and our model is written in Python, we need some tools to interface between the two.

Selenium is a popular browser-automation tool; we use it to send actions to the browser and to read game parameters such as the current score.

Once we have an interface for sending actions, we also need a mechanism to capture the game screen.

Selenium and OpenCV gave the best performance for screen capture and image preprocessing respectively, reaching a frame rate of 6-7 fps.

Game Module

We use this module to implement the interface between Python and JavaScript. The following code shows how the module works:

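    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    chrome_driver_path = "path/to/chromedriver"  # adjust to where you saved ChromeDriver
    game_url = "chrome://dino"

    class Game:
        def __init__(self):
            # Selenium 4 API (Selenium 3 code used webdriver.Chrome(executable_path=...))
            self._driver = webdriver.Chrome(service=Service(chrome_driver_path))
            self._driver.set_window_position(x=-10, y=0)
            self._driver.get(game_url)

        def get_crashed(self):
            # used by DinoAgent.is_crashed()
            return self._driver.execute_script("return Runner.instance_.crashed")

        def restart(self):
            self._driver.execute_script("Runner.instance_.restart()")

        def press_up(self):
            self._driver.find_element(By.TAG_NAME, "body").send_keys(Keys.ARROW_UP)

        def get_score(self):
            # the game exposes the score as an array of digit characters
            score_array = self._driver.execute_script("return Runner.instance_.distanceMeter.digits")
            score = ''.join(score_array)
            return int(score)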

Agent module

We use an agent module to wrap all of the interfacing. Through it we control Dino and get the agent's status in the environment.

    class DinoAgent:
        def __init__(self, game):  # takes game as input for taking actions
            self._game = game
            self.jump()  # to start the game, we need to jump once

        def is_crashed(self):
            return self._game.get_crashed()

        def jump(self):
            self._game.press_up()

Game State Module

To send actions to the agent and get back the resulting state, we use the Game State module. It simplifies the process: it receives and executes actions, determines the reward, and returns an experience tuple.

    class Game_state:
        def __init__(self, agent, game):
            self._agent = agent
            self._game = game

        def get_state(self, actions):
            score = self._game.get_score()  # current score (not used in the reward here)
            reward = 0.1  # survival reward
            is_over = False  # game over
            if actions[1] == 1:  # else do nothing
                self._agent.jump()
            image = grab_screen(self._game._driver)
            if self._agent.is_crashed():
                self._game.restart()
                reward = -1
                is_over = True
            return image, reward, is_over  # return the experience tuple
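To see what this reward scheme (+0.1 for every step survived, -1 on a crash) encourages, the short sketch below computes the discounted return of every step of one episode. `GAMMA = 0.99` is an assumed discount factor, not a value given in this article, and the helper names are hypothetical.

```python
SURVIVAL_REWARD = 0.1  # reward per step survived, as in Game_state.get_state
CRASH_REWARD = -1.0    # reward on the step where Dino crashes
GAMMA = 0.99           # discount factor (assumed value)


def episode_rewards(steps_survived):
    """Rewards for an episode that survives `steps_survived` steps, then crashes."""
    return [SURVIVAL_REWARD] * steps_survived + [CRASH_REWARD]


def discounted_returns(rewards, gamma=GAMMA):
    """Return G_t = r_t + gamma * G_{t+1} for every step, computed backwards."""
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns
```

With `GAMMA = 0.99`, the first step of a 100-step run is worth about 5.97, while the first step of a 5-step run is worth about -0.46. The step right before a crash has a return of 0.1 - 0.99 = -0.89, which is the signal that discourages the actions leading up to a collision.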

▌ Image pipeline

Image capture

We could capture the game screen in several ways, for example by using the PIL and MSS Python libraries to grab the whole screen and crop the region of interest (ROI). The biggest drawback of that approach, however, is its sensitivity to screen resolution and window position. Fortunately, the game uses an HTML Canvas, so we can easily get the image in Base64 format with JavaScript, which we run through Selenium:

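    // JavaScript code to get the image data from the canvas
    // (Selenium's execute_script wraps it in a function, so `return` is allowed)
    var canvas = document.getElementsByClassName('runner-canvas')[0];
    var img_data = canvas.toDataURL();
    // drop the "data:image/png;base64," prefix so that only Base64 data remains
    return img_data.split(',')[1];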

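    import base64
    from io import BytesIO

    import numpy as np
    from PIL import Image

    # JavaScript run in the page: returns the canvas as Base64-encoded PNG, without the data-URL prefix
    getbase64Script = ("var canvas = document.getElementsByClassName('runner-canvas')[0]; "
                       "return canvas.toDataURL().split(',')[1];")

    def grab_screen(_driver=None):
        image_b64 = _driver.execute_script(getbase64Script)
        screen = np.array(Image.open(BytesIO(base64.b64decode(image_b64))))
        image = process_img(screen)  # processing image as required
        return image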
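`canvas.toDataURL()` returns a data URL of the form `data:image/png;base64,<payload>`, and only the part after the comma is Base64. By default `base64.b64decode` discards characters outside the Base64 alphabet instead of raising an error, so decoding the whole URL would mix the letters of the prefix into the data: the call then fails with a padding error or returns corrupted bytes. If you prefer to keep the full `toDataURL()` string in JavaScript and strip the prefix on the Python side, a small helper like this one works (the name `decode_data_url` is hypothetical, not part of the original project):

```python
import base64


def decode_data_url(data_url):
    """Decode a Base64 data URL such as 'data:image/png;base64,....' to raw bytes."""
    header, sep, payload = data_url.partition(",")
    if not sep or not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("expected a base64-encoded data URL")
    # validate=True rejects stray non-Base64 characters instead of silently skipping them
    return base64.b64decode(payload, validate=True)
```

The returned bytes can then go through `Image.open(BytesIO(...))` exactly as in `grab_screen`.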

Image Processing

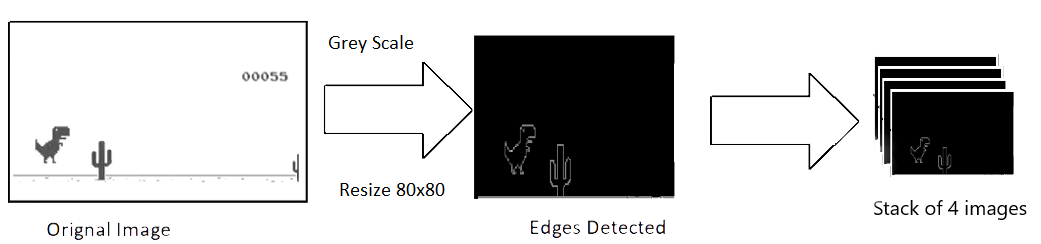

The captured raw image has a resolution of 600x150 with 3 channels (RGB). We intend to use 4 consecutive screenshots as a single input to the model, which would make each input 600x150x3x4. That is too large: it needs a lot of computing power, and not all of its features are useful. So we use the OpenCV library to convert, crop, and resize the images. The final processed input is only 80x80 pixels with a single grayscale channel.

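    import cv2

    def process_img(image):
        image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)  # PIL returns RGB(A) order; convert to grayscale
        image = image[:300, :500]  # crop the region of interest (ROI)
        image = cv2.resize(image, (80, 80))  # shrink to the 80x80 model input
        return image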
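The NumPy-only sketch below mirrors these preprocessing steps, plus the stacking of 4 frames, so that the array shapes can be checked without OpenCV or a browser. It is an approximation rather than the original code: grayscale uses the ITU-R BT.601 luma weights (the ones OpenCV also uses for RGB to gray) and returns floats instead of `uint8`, and the resize is nearest-neighbour rather than OpenCV's default bilinear interpolation. The helper names are hypothetical.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114])  # BT.601 weights for R, G, B


def to_gray(rgb):
    """(H, W, 3 or 4) uint8 RGB(A) -> (H, W) float grayscale; alpha is ignored."""
    return rgb[..., :3].astype(np.float64) @ LUMA


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D array to (out_h, out_w)."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[rows[:, None], cols[None, :]]


def process_frame(rgb, size=80):
    """Grayscale, crop like process_img, then resize to size x size."""
    gray = to_gray(rgb)
    gray = gray[:300, :500]  # on a 150-pixel-high frame this keeps all rows
    return resize_nearest(gray, size, size)


def stack_frames(frames):
    """Stack 4 processed frames on the last axis and add a batch axis."""
    return np.stack(frames, axis=-1)[np.newaxis, ...]  # (1, 80, 80, 4)
```

For a 150x600 RGBA canvas frame, `process_frame` yields an 80x80 array, and stacking four of them gives the 1x80x80x4 tensor that the model expects.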

Caption: Image processing

Model Architecture

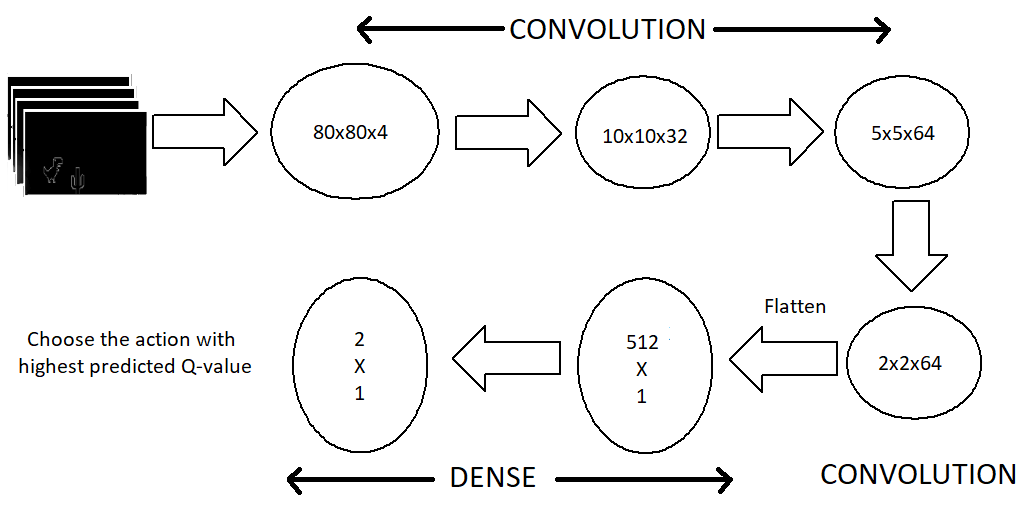

Let us now look at the model architecture. We use a series of three convolutional layers, followed by a flatten step, a dense layer, and the output layer. The CPU-only model did not include pooling layers, because I had removed many features and adding pooling would have discarded a large part of an already sparse feature set. With a GPU, however, the model can accommodate more features without lowering the frame rate.

Max-pooling layers significantly improve the processing of dense feature sets.

Caption: Model architecture

The output layer consists of two neurons, each giving the predicted return (Q-value) for one of the two actions. We then choose the action with the largest Q-value.

    from keras.models import Sequential
    from keras.layers import Activation, Conv2D, Dense, Flatten, MaxPooling2D
    from keras.optimizers import Adam

    img_rows, img_cols = 80, 80  # processed frame size
    img_channels = 4             # 4 stacked frames
    ACTIONS = 2                  # do nothing, jump
    # LEARNING_RATE is a hyperparameter defined elsewhere

    def buildmodel():
        print("Now we build the model")
        model = Sequential()
        model.add(Conv2D(32, (8, 8), padding='same', strides=(4, 4),
                         input_shape=(img_cols, img_rows, img_channels)))  # 80*80*4
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Activation('relu'))
        model.add(Conv2D(64, (4, 4), strides=(2, 2), padding='same'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Activation('relu'))
        model.add(Conv2D(64, (3, 3), strides=(1, 1), padding='same'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Activation('relu'))
        model.add(Flatten())
        model.add(Dense(512))
        model.add(Activation('relu'))
        model.add(Dense(ACTIONS))  # one linear output (Q-value) per action
        adam = Adam(learning_rate=LEARNING_RATE)
        model.compile(loss='mse', optimizer=adam)
        print("We finish building the model")
        return model
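How much the pooling layers shrink the feature maps can be checked with a little shape arithmetic. The stdlib-only sketch below traces the spatial size of an 80x80x4 input through the layers of `buildmodel()`, assuming Keras' rules: a `'same'`-padded convolution with stride s outputs ceil(n / s), and `MaxPooling2D(pool_size=(2, 2))` with its default `'valid'` padding and stride equal to the pool size outputs floor(n / 2). The function name is hypothetical.

```python
import math

# (name, filters, stride) for each convolution in buildmodel(); each is followed by 2x2 max pooling
CONV_LAYERS = [("conv1", 32, 4), ("conv2", 64, 2), ("conv3", 64, 1)]


def trace_shapes(size=80, channels=4, pool=True):
    """Return [(layer name, (h, w, c)), ...] for a square input of side `size`."""
    shapes = [("input", (size, size, channels))]
    n = size
    for name, filters, stride in CONV_LAYERS:
        n = math.ceil(n / stride)  # 'same' padding
        shapes.append((name, (n, n, filters)))
        if pool:
            n = n // 2  # 2x2 max pooling, 'valid' padding, stride 2
            shapes.append((name + "_pool", (n, n, filters)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

With pooling, the flattened vector feeding `Dense(512)` holds only 1 x 1 x 64 = 64 values; without it (`trace_shapes(pool=False)`), it holds 10 x 10 x 64 = 6400. This is consistent with the remark above that pooling discards a large part of an already sparse feature set.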

Training

Start with no action and get the initial state (s_t)

Observe game play for a set number of steps (OBSERVATION)

Predict and perform an action

Store the experience in replay memory

Randomly select a batch from replay memory and train the model on it

Restart when the game is over
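The replay-memory steps in this list can be sketched on their own with the standard library. This is a minimal illustration under assumptions: `ReplayMemory` is a hypothetical helper and the capacity is an example value, not one from the article. A `deque` with `maxlen` drops the oldest transition automatically once it is full, which keeps memory use bounded during endless training.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-size store of (state, action, reward, next_state, terminal) tuples."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions fall off the left

    def push(self, state, action, reward, next_state, terminal):
        self.buffer.append((state, action, reward, next_state, terminal))

    def sample(self, batch_size):
        # uniform sampling without replacement breaks up runs of correlated frames
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

For example, pushing five transitions into `ReplayMemory(capacity=3)` leaves only the three most recent ones, and `sample(2)` draws two of them at random.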

    import random
    from collections import deque

    import numpy as np

    # ACTIONS, OBSERVATION, INITIAL_EPSILON, BATCH, GAMMA and REPLAY_MEMORY
    # (maximum number of stored transitions) are hyperparameters defined elsewhere.

    def trainNetwork(model, game_state):
        # store the previous observations in replay memory; the oldest are dropped when full
        D = deque(maxlen=REPLAY_MEMORY)

        # get the first state by doing nothing
        do_nothing = np.zeros(ACTIONS)
        do_nothing[0] = 1  # 0 => do nothing, 1 => jump
        x_t, r_0, terminal = game_state.get_state(do_nothing)  # get next step after performing the action

        # stack 4 copies of the first image to create the initial input, reshaped to 1x80x80x4
        s_t = np.stack((x_t, x_t, x_t, x_t), axis=2)
        s_t = s_t.reshape(1, s_t.shape[0], s_t.shape[1], s_t.shape[2])

        OBSERVE = OBSERVATION
        epsilon = INITIAL_EPSILON
        t = 0
        while True:  # endless running
            a_t = np.zeros([ACTIONS])  # action at t

            # with probability epsilon take a random action (exploration), otherwise ask the model
            if random.random() < epsilon:
                action_index = random.randrange(ACTIONS)
            else:
                q = model.predict(s_t)  # input a stack of 4 images, get the prediction
                action_index = int(np.argmax(q))  # choose the index with the maximum Q-value
            a_t[action_index] = 1  # 0 => do nothing, 1 => jump

            # run the selected action and observe the next state and reward
            x_t1, r_t, terminal = game_state.get_state(a_t)
            x_t1 = x_t1.reshape(1, x_t1.shape[0], x_t1.shape[1], 1)  # 1x80x80x1
            s_t1 = np.append(x_t1, s_t[:, :, :, :3], axis=3)  # newest image first, oldest one dropped

            D.append((s_t, action_index, r_t, s_t1, terminal))  # store the transition

            # only train once done observing; sample a minibatch to train on
            if t > OBSERVE:
                trainBatch(model, random.sample(D, BATCH))

            s_t = s_t1
            t += 1

Note that we sample 32 random experiences from the replay memory and train on them as one batch. We do this because actions are unevenly distributed in the game (the agent does nothing far more often than it jumps), and because random sampling helps avoid overfitting.

    def trainBatch(model, minibatch):
        # one input stack and one target vector per sampled transition
        state_shape = minibatch[0][0].shape  # (1, 80, 80, 4)
        inputs = np.zeros((len(minibatch), state_shape[1], state_shape[2], state_shape[3]))  # 32, 80, 80, 4
        targets = np.zeros((inputs.shape[0], ACTIONS))  # 32, 2

        for i in range(len(minibatch)):
            state_t = minibatch[i][0]  # 4D stack of images
            action_t = minibatch[i][1]  # this is the action index
            reward_t = minibatch[i][2]  # reward at state_t due to action_t
            state_t1 = minibatch[i][3]  # next state
            terminal = minibatch[i][4]  # whether the agent died or survived due to the action

            inputs[i:i + 1] = state_t
            # predicted Q-values; the action not taken keeps its prediction, so it adds no error
            targets[i] = model.predict(state_t)[0]
            Q_sa = model.predict(state_t1)  # predicted Q-values for the next step

            if terminal:
                targets[i, action_t] = reward_t  # if terminated, the target is just the reward
            else:
                targets[i, action_t] = reward_t + GAMMA * np.max(Q_sa)

        # a single training step on the whole minibatch
        return model.train_on_batch(inputs, targets)
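The `epsilon` variable controls exploration: with probability epsilon the agent takes a random action instead of the model's best guess, which is how it starts out with random actions. A common schedule, sketched below as an assumption rather than the article's exact setting, anneals epsilon linearly from `INITIAL_EPSILON` down to a floor `FINAL_EPSILON` over `EXPLORE` steps once the observation phase is over. All three constants are hypothetical example values.

```python
import random

INITIAL_EPSILON = 0.1   # example value (assumption)
FINAL_EPSILON = 0.0001  # example value (assumption)
EXPLORE = 100_000       # steps over which epsilon is annealed (assumption)


def anneal_epsilon(epsilon, t, observe):
    """Linearly decrease epsilon after the observation phase, never below the floor."""
    if t > observe and epsilon > FINAL_EPSILON:
        epsilon -= (INITIAL_EPSILON - FINAL_EPSILON) / EXPLORE
    return max(epsilon, FINAL_EPSILON)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action index with probability epsilon, else the argmax."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

Inside a training loop like the one above, one would call `epsilon = anneal_epsilon(epsilon, t, OBSERVE)` once per step, so that the agent explores heavily at first and relies more and more on its learned Q-values.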

Results

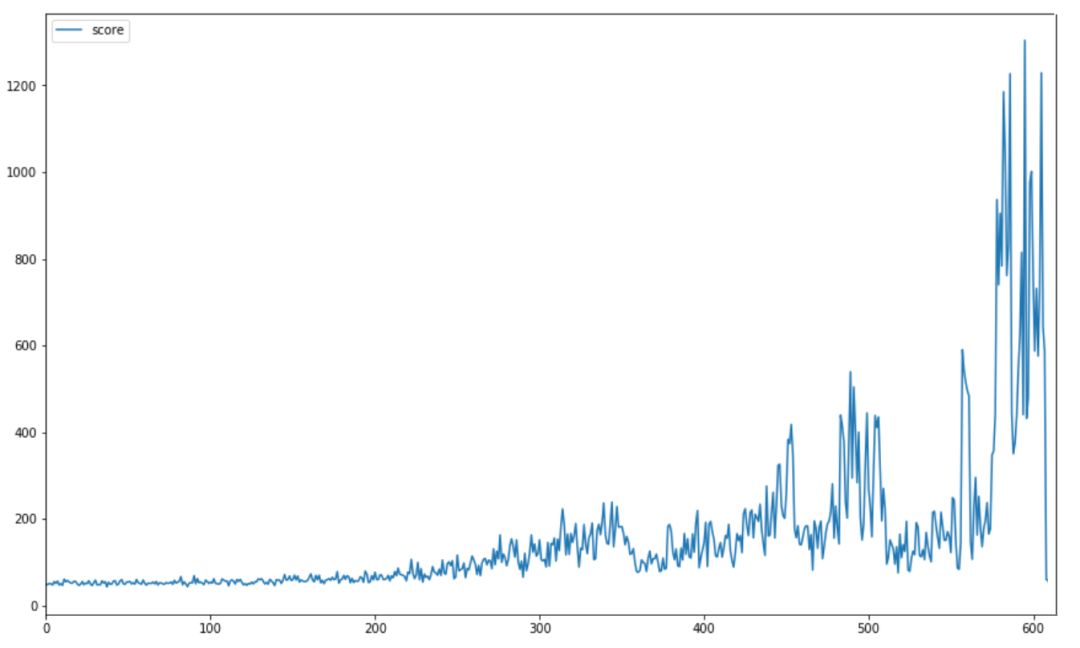

This architecture achieved good results. The graph below shows the average scores at the beginning of training. By the end of training, the average score over every 10 games is well above 1000.

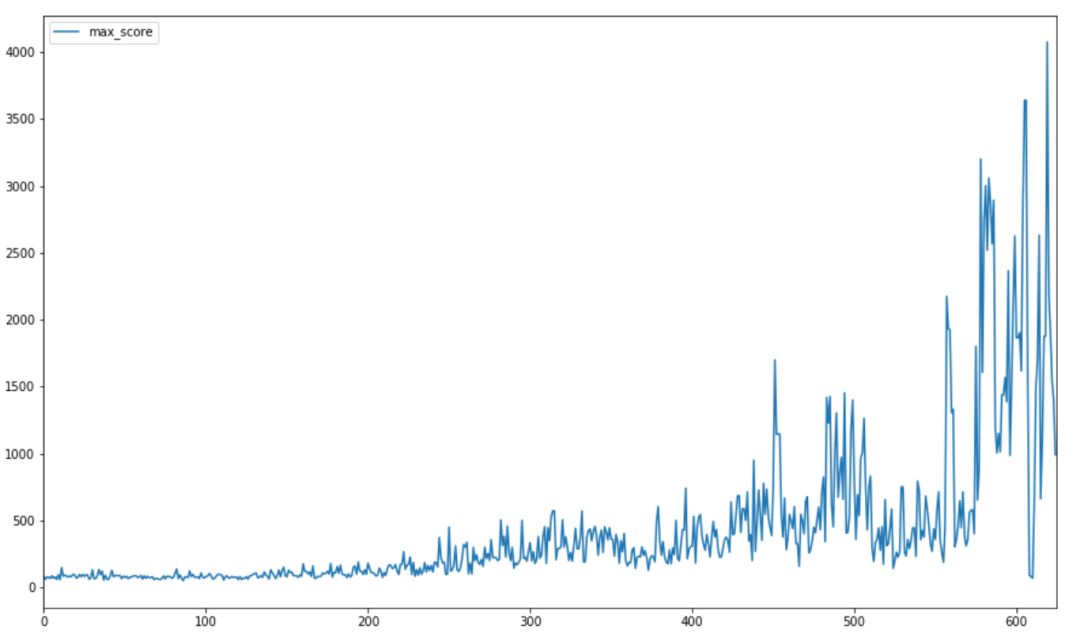

The highest recorded score is over 4000, far above the 250 points of the previous model (and far beyond what most people can manage!). The figure below shows how the highest score progressed during training.

Dino's speed increases with the score, which makes it harder to detect obstacles and decide on an action at higher speeds. The entire game was therefore trained at a constant speed.
