An embedded system is a device that operates within limited space and with limited resources to efficiently implement a specific function or set of functions.

Its development is usually constrained by many practical conditions, such as limited CPU processing power, small memory, few peripherals to choose from, and a limited power supply. Developing an embedded system therefore requires careful planning to get the most out of the available resources. Among the operating systems that run on embedded systems, embedded Linux is attracting more and more attention because it is free, highly reliable, open source, and supports a wide range of hardware. Because the source code is open, developers can modify the Linux kernel for a specific embedded system to meet development requirements and optimize the system. A major problem in embedded Linux applications is real-time performance. A real-time system must respond correctly to external events within a bounded time, and its focus is on meeting sudden, short-lived processing demands. As a traditional time-sharing operating system, Linux focuses more on overall system throughput. Improving the real-time performance of Linux is a challenge that most embedded system-level developers face.

1 Related research

There are various Linux distributions on the market, but strictly speaking, Linux refers to the kernel maintained by Linus Torvalds (and released through the main site and its mirrors). No special kernel is needed to build an embedded system; an embedded Linux system is simply an embedded system based on the Linux kernel. In the rest of this article, Linux refers to the Linux kernel. A lot of work is under way to improve the real-time performance of Linux. Linux 2.6, the latest version, implements preemptible kernel task scheduling, but the problem of nondeterministic interrupt delay has not been solved. That is, although in Linux 2.6 a high-priority process running in kernel space can preempt a low-priority process just as it can in user space, the time from the arrival of an interrupt to the execution of the first instruction of the interrupt service routine is not deterministic.

In addition to the work of the Linux kernel developers, some organizations and companies have done a lot to improve the real-time performance of Linux. Representative examples are RT-Linux from FSMLabs, MontaVista Linux from MontaVista, and the RTAI (Real-Time Application Interface) project maintained by Paolo Mantegazza and others. The methods used in these projects fall into two categories:

(1) Modify the Linux kernel directly. MontaVista Linux uses this method. It modified Linux into a preemptible kernel called the Relaxed Fully Preemptable Kernel, implemented real-time scheduling mechanisms and algorithms, and added a fine-grained timer, turning Linux into a soft real-time kernel.

(2) "Dual-kernel" mode. The RTAI project and RT-Linux adopted this approach. A small real-time kernel is added, and traditional Linux runs as its lowest-priority task, while real-time tasks run at the highest priority. That is, real-time tasks run whenever any are present; otherwise the tasks of Linux itself run.

The limitation of MontaVista Linux and RT-Linux is that they are commercial software and do not follow the GNU open source principle. Using them in a system requires paying a considerable license fee, which goes against the original reasons for choosing Linux: it is open source, it is free, and it lets you develop your own intellectual property.

RTAI gives up many inherent advantages of Linux for the sake of real-time performance: support for a wide range of hardware, and excellent stability and reliability. Developers have to rewrite drivers for RTHAL (Real-Time Hardware Abstraction Layer), a hardware abstraction layer customized by RTAI, and the work of the huge Linux development community cannot easily be applied to the real-time kernel.

2 Factors affecting Linux real-time performance

2.1 Task switching and its delay

Task switching delay is the time Linux needs to switch from one process to another, that is, the interval between a high-priority process requesting the CPU and the execution of its first instruction. In a real-time system, the task switching delay must be as short as possible. As mentioned above, Linux 2.6.x has a preemptible kernel: a high-priority process in kernel space can make the CPU stop a low-priority process at any time and run itself, just as in user space. There are two exceptions:

(1) A process cannot be preempted by other processes while it is executing in a critical section;

(2) An interrupt service routine (ISR) cannot be preempted by other processes.

2.2 Priority-based scheduling algorithm

Linux 2.6 adopts the O(1) scheduling algorithm. It is a priority-based preemptive scheduler that assigns a priority to each process. The scheduler guarantees that, among all tasks waiting to run, the highest-priority task is always executed first, so high-priority tasks can preempt low-priority tasks.

The overhead of this scheduler is constant and independent of the current system load, which helps the real-time performance of the system. However, the scheduler can only take the CPU away from a task; it provides no way to preempt other resources, so real-time performance is not fundamentally improved. If two tasks need the same resource (such as a cache), and the high-priority task becomes ready while the low-priority task is using the resource, the high-priority task must wait until the low-priority task releases the resource. This is called priority inversion.

2.3 Interrupt delay, interrupt service routine

Interrupt delay is the interval from the moment a peripheral raises an interrupt signal to the moment the first instruction of the ISR starts to execute. Real-time task demands triggered by external interrupts make up the main workload of a real-time system, so fast interrupt response and fast interrupt service routine processing are important indicators of real-time performance. Different ISRs have different execution times, and even the same ISR may take different amounts of time because it has multiple exit paths. External interrupts are disabled while an ISR executes. As a result, even if the interrupt delay of Linux is normally very small, a peripheral that raises an interrupt while another ISR is running has to wait, and because the running time of that ISR is uncertain and the ISR cannot be preempted, the interrupt delay of Linux becomes unpredictable.

3 Improvement of system real-time performance

3.1 Establishing a task switching mechanism

Section 2.1 noted that a process cannot be preempted while it executes in a critical section. To avoid affecting system stability and to limit debugging and testing time, we do not modify this behavior. Instead, we introduce a mechanism that ensures real-time tasks get priority: in a real-time system, a process is allowed to enter a critical section only if it can leave that section before the next real-time task starts.

How can the arrival time of the next real-time task's interrupt signal be determined? In general, interrupt signals are used for tasks whose start time is unpredictable, and they arrive at random. To make the arrival time predictable, interrupt signals are tied to the clock interrupt: an interrupt signal can only be generated at the same time as a clock interrupt. The clock interrupt is generated periodically by the system timer hardware. The kernel sets the period according to the HZ value, an architecture-dependent constant defined in an architecture-specific kernel header. For most platforms, current Linux defines HZ as 100, which gives a clock interrupt period of 10 ms. This clearly cannot meet the timing accuracy requirements of a real-time system. Increasing HZ improves timing accuracy, but at the cost of higher system overhead, so real-time requirements and system overhead must be weighed carefully. One approach is to measure, through a large number of tests, the interval between real-time task interrupt requests and the execution time of processes in critical sections, and then choose a clock period slightly larger than both the interrupt interval of most real-time tasks and the critical section execution time.
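The relationship between the HZ value and the clock interrupt period can be checked with a few lines of C. This is a user-space sketch only; tick_period_usec() and ticks_for_usec() are hypothetical helper names introduced for illustration, not kernel functions.

```c
/* Clock interrupt period in microseconds for a given HZ value.
 * Assumes hz > 0. */
long tick_period_usec(long hz)
{
    return 1000000L / hz;
}

/* Number of clock ticks needed to cover a duration, rounded up.
 * Useful for judging how many clock periods a measured critical
 * section would span at a candidate HZ value. */
long ticks_for_usec(long hz, long duration_usec)
{
    long period = tick_period_usec(hz);
    return (duration_usec + period - 1) / period;
}
```

For example, tick_period_usec(100) gives 10000 µs, which is the 10 ms period mentioned above.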

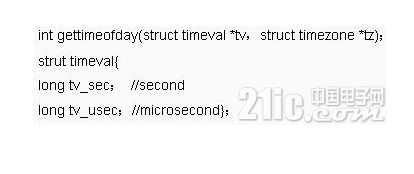

Linux provides several mechanisms for measuring the execution time of a function, and gettimeofday() is one of them. The prototype of the function and the data structure it uses are as follows:
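For reference, the declarations as documented in the Linux man page for gettimeofday() are reproduced in the comment below. The helper now_usec() is a hypothetical name added here only to show a minimal call; it is not part of the original listing.

```c
#include <stddef.h>
#include <sys/time.h>   /* gettimeofday(), struct timeval */

/*
 * Provided by <sys/time.h>:
 *
 *   int gettimeofday(struct timeval *tv, struct timezone *tz);
 *
 *   struct timeval {
 *       time_t      tv_sec;    seconds since the Epoch
 *       suseconds_t tv_usec;   additional microseconds
 *   };
 */

/* Current wall-clock time in microseconds, or -1 on failure. */
long long now_usec(void)
{
    struct timeval tv;

    if (gettimeofday(&tv, NULL) != 0)
        return -1;
    return (long long)tv.tv_sec * 1000000LL + tv.tv_usec;
}
```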

Here gettimeofday() stores the current time in the tv structure; tz is generally not needed and can be passed as NULL. An example of its use is as follows:
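A minimal sketch of such a measurement is shown here. The body of function_in_critical_section() is a placeholder loop standing in for the real critical-section code, and time_critical_section_usec() is a hypothetical wrapper name.

```c
#include <stddef.h>
#include <sys/time.h>

/* Placeholder for the work done inside the critical section. */
static void function_in_critical_section(void)
{
    volatile unsigned long sink = 0;
    unsigned long i;

    for (i = 0; i < 100000UL; i++)
        sink += i;
}

/* Runs the critical section once and returns the elapsed
 * wall-clock time in microseconds. */
long time_critical_section_usec(void)
{
    struct timeval start, end;

    gettimeofday(&start, NULL);
    function_in_critical_section();
    gettimeofday(&end, NULL);

    return (long)(end.tv_sec - start.tv_sec) * 1000000L
         + (long)(end.tv_usec - start.tv_usec);
}
```

Because a single run can be disturbed by other activity on the system, averaging many runs gives a more useful figure.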

In this way, the time the process spends in the critical section function_in_critical_section() can be obtained for reference. With HZ set to 2000, the system clock interrupt period is 0.5 ms, so the timing resolution is 20 times finer than with HZ = 100.

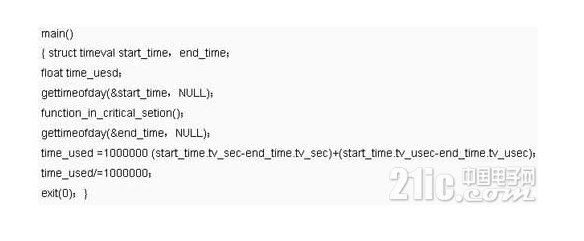

As shown in Figure 1 and Figure 2, before a process enters a critical section it compares its own average execution time T(NP) with T(REMAIN). The process is allowed to enter the critical section only when T(NP) ≤ T(REMAIN); otherwise it is placed in the work queue and waits for the next check.
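The entry check can be sketched as follows. The sketch assumes that T(REMAIN) is the time left until the next clock interrupt, which the text implies but does not state outright. Both function names are hypothetical, and all times are in microseconds.

```c
#include <stdbool.h>

/* Assumed meaning of T(REMAIN): time left until the next clock
 * interrupt, given the clock period and the time already elapsed
 * since the last clock interrupt.
 * Expects 0 <= elapsed_usec <= tick_period_usec. */
long t_remain_usec(long tick_period_usec, long elapsed_usec)
{
    return tick_period_usec - elapsed_usec;
}

/* Entry rule from the text: allow the critical section only when
 * T(NP) <= T(REMAIN); otherwise the caller should queue the process. */
bool may_enter_critical_section(long t_np_usec,
                                long tick_period_usec,
                                long elapsed_usec)
{
    return t_np_usec <= t_remain_usec(tick_period_usec, elapsed_usec);
}
```

With a 500 µs clock period and 100 µs already elapsed, a critical section averaging 400 µs is admitted, while one averaging 401 µs is deferred to the next check.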

This article uses a simple mathematical model to analyze how much this mechanism improves real-time performance. First, a definition: when a real-time task scheduled to run at time t is postponed to time t', the difference t' - t is called the system delay and is denoted Lat(OS). In ordinary Linux, Lat(OS) is:

Lat(OS) = T(NP) + T(SHED)

Assume that at any moment the probability that T(NP) ≤ T(REMAIN) is ρ. The average Lat(OS) in ordinary Linux is then

AvLat(NOR-OS) = ρ[T(NP) + T(SHED)] + (1 - ρ)[T(NP) + 2T(SHED)] = T(NP) + (2 - ρ)T(SHED)

After the mechanism is introduced, real-time tasks are always guaranteed to run first, so Lat(RT-OS) is fixed:

Lat(RT-OS) = T(SHED)

The change in system delay before and after adopting the mechanism is

δ = AvLat(NOR-OS) - Lat(RT-OS) = T(NP) + (1 - ρ)T(SHED)

In a given system ρ is fixed, and in Linux 2.6 T(SHED) is also fixed once the O(1) algorithm is adopted. It follows from this formula that the longer a process takes to execute in the critical section, the larger the reduction in average system delay after the mechanism is introduced, and the more obvious the improvement in real-time performance.
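The delay model can also be evaluated numerically. The sketch below computes the two delays directly from their definitions and takes the difference. The function names are hypothetical, rho stands for the probability that T(NP) ≤ T(REMAIN), and the values used in the note afterwards are made up for illustration rather than measured.

```c
/* Average delay in ordinary Linux:
 * rho * (T(NP) + T(SHED)) + (1 - rho) * (T(NP) + 2 * T(SHED)) */
double av_lat_ordinary(double rho, double t_np, double t_shed)
{
    return rho * (t_np + t_shed)
         + (1.0 - rho) * (t_np + 2.0 * t_shed);
}

/* Delay with the mechanism in place: only T(SHED) remains. */
double lat_rt(double t_shed)
{
    return t_shed;
}

/* Reduction in delay: average ordinary delay minus real-time delay. */
double delay_reduction(double rho, double t_np, double t_shed)
{
    return av_lat_ordinary(rho, t_np, t_shed) - lat_rt(t_shed);
}
```

With rho = 0.5, T(NP) = 100 µs and T(SHED) = 10 µs, the average delay is 115 µs without the mechanism and 10 µs with it, a reduction of 105 µs. Doubling T(NP) to 200 µs raises the reduction to 205 µs, which matches the observation that longer critical sections benefit more.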

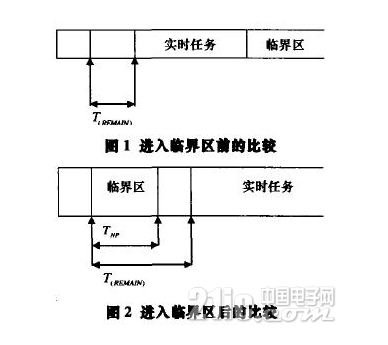

3.2 Priority ceiling

Consider the following scenario: a low-priority task L and a high-priority task H need the same shared resource. Shortly after task L starts, task H becomes ready. Finding the shared resource occupied, task H is suspended and waits for task L to release it. At this point a medium-priority task M that does not need the resource appears, and the scheduler switches to task M according to the priority rule. This further lengthens the waiting time of task H, as shown in Figure 3. Worse, if there are more such tasks M0, M1, M2, ..., task H may miss its critical deadline, which can cause the system to fail.

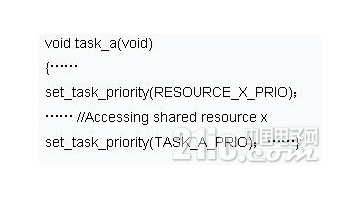

In a real-time system that is not too complex, this problem can be solved with the priority ceiling method. The scheme assigns a priority to every resource that may be shared, equal to the priority of the highest-priority process that may use the resource (RESOURCE_X_PRIO in the pseudocode below). The scheduler passes this priority to the process that is using the resource, and the process returns to its own priority (TASK_A_PRIO in the pseudocode below) once it has finished with the resource. This prevents task L from being preempted by task M in the scenario above, which would otherwise leave task H suspended indefinitely. Sample code for the priority ceiling is as follows:
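The listing below is a small user-space model of the scheme, not kernel code. It assumes that a larger number means a higher priority. The numeric values of TASK_A_PRIO and RESOURCE_X_PRIO, the two structures, and the functions resource_acquire() and resource_release() are all hypothetical and chosen only for illustration.

```c
#include <stddef.h>

#define TASK_A_PRIO     10  /* normal priority of the task (made-up value) */
#define RESOURCE_X_PRIO 90  /* ceiling: priority of the highest-priority
                               task that may use resource X (made-up value) */

struct task {
    int base_prio;     /* priority assigned to the task */
    int current_prio;  /* priority the scheduler currently applies */
};

struct resource {
    int ceiling_prio;     /* priority ceiling of the resource */
    struct task *owner;   /* NULL while the resource is free */
};

/* Take the resource and raise the task to the resource's ceiling.
 * Returns 0 on success, -1 if the resource is already held. */
int resource_acquire(struct resource *r, struct task *t)
{
    if (r->owner != NULL)
        return -1;
    r->owner = t;
    if (t->current_prio < r->ceiling_prio)
        t->current_prio = r->ceiling_prio;
    return 0;
}

/* Give the resource back and restore the task's own priority. */
void resource_release(struct resource *r, struct task *t)
{
    if (r->owner != t)
        return;
    r->owner = NULL;
    t->current_prio = t->base_prio;
}
```

While task L holds resource X it runs at RESOURCE_X_PRIO, so a medium-priority task M cannot preempt it, and task H waits only for the critical section itself.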

3.3 Kernel threads

An interrupt service routine (ISR) cannot be preempted. Once the CPU starts executing an ISR, it cannot switch to another task until the routine ends. Linux uses a spinlock to give the ISR exclusive use of the CPU. An ISR holding a spinlock cannot sleep, and system interrupts are completely disabled during that time. Kernel threads are created and destroyed by the kernel to execute a specified function. A kernel thread has its own kernel stack and can be scheduled individually. We use kernel threads in place of ISRs and mutexes in place of spinlocks. Kernel threads can sleep, and external interrupts are not disabled while they run. When the system receives an interrupt signal, it wakes the corresponding kernel thread, which does the work of the original ISR and then goes back to sleep. In this way, the interrupt delay is predictable and short.

According to test data from LynuxWorks, on a 1 GHz Pentium III PC the Linux 2.4 kernel has an average task response time of 1133 µs and an average interrupt response time of 252 µs, while the Linux 2.6 kernel has an average task response time of 132 µs and an average interrupt response time of only 14 µs, roughly an order of magnitude better than Linux 2.4. Building on this, the method described here can further shorten the response time of a specific interrupt in a specific system and improve the real-time performance of the application.
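The wake-and-sleep pattern can be illustrated in user space with POSIX threads. This is an analogy under stated assumptions, not kernel code: a pthread stands in for the kernel thread, a pthread mutex and condition variable stand in for the kernel mutex and wake-up, and irq_signal() plays the role of the short stub that runs when the interrupt arrives. All names are hypothetical.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

struct irq_thread {
    pthread_mutex_t lock;
    pthread_cond_t  wake;
    int  pending;   /* interrupts signalled but not yet serviced */
    int  handled;   /* interrupts fully serviced */
    bool stop;      /* set to ask the thread to exit once idle */
};

/* Stand-in for the interrupt stub: record the event, wake the thread. */
void irq_signal(struct irq_thread *t)
{
    pthread_mutex_lock(&t->lock);
    t->pending++;
    pthread_cond_signal(&t->wake);
    pthread_mutex_unlock(&t->lock);
}

/* Thread body: sleep until woken, service one event, sleep again. */
void *irq_thread_fn(void *arg)
{
    struct irq_thread *t = arg;

    pthread_mutex_lock(&t->lock);
    for (;;) {
        while (t->pending == 0 && !t->stop)
            pthread_cond_wait(&t->wake, &t->lock);   /* sleeping */
        if (t->pending == 0)
            break;                                   /* stop requested */
        t->pending--;
        pthread_mutex_unlock(&t->lock);
        /* Device-specific work would run here, preemptible and
         * without holding the lock. */
        pthread_mutex_lock(&t->lock);
        t->handled++;
    }
    pthread_mutex_unlock(&t->lock);
    return NULL;
}

/* Simulates n interrupts; returns how many were serviced, or -1. */
int run_irq_demo(int n)
{
    struct irq_thread t;
    pthread_t tid;
    int i, handled;

    pthread_mutex_init(&t.lock, NULL);
    pthread_cond_init(&t.wake, NULL);
    t.pending = 0;
    t.handled = 0;
    t.stop = false;

    if (pthread_create(&tid, NULL, irq_thread_fn, &t) != 0)
        return -1;
    for (i = 0; i < n; i++)
        irq_signal(&t);

    pthread_mutex_lock(&t.lock);
    t.stop = true;
    pthread_cond_signal(&t.wake);
    pthread_mutex_unlock(&t.lock);
    pthread_join(tid, NULL);

    handled = t.handled;
    pthread_cond_destroy(&t.wake);
    pthread_mutex_destroy(&t.lock);
    return handled;
}
```

The signalling side only increments a counter and wakes the thread, so it stays short and its duration does not depend on how long the device-specific work takes.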

4 Summary and outlook

Based on Linux 2.6, this article discusses ways to improve the real-time performance of Linux. It introduces a mechanism under which a process may enter a critical section only if it can leave it before the next real-time task starts, which ensures that real-time tasks always run first. The priority ceiling method avoids priority inversion. Replacing interrupt service routines with kernel threads removes the restriction that an ordinary ISR cannot sleep while executing, and external interrupts are not disabled during execution, which makes the interrupt delay of the system short and predictable. The disadvantage of the approach is that raising the system clock interrupt frequency increases system overhead. To balance the gain in real-time performance against the added overhead, developers have to run many tests on the specific system and analyze each case on its own terms, which limits the applicability of the method. Linux is bound to be widely used in embedded systems because it is free, powerful, and well supported by tools. We should follow the development of Linux at home and abroad, accumulate development experience in this field, and find our own path.
